23.06.2021

What's the difference?

Managed vs. unmanaged Kubernetes

We’ve noticed an increasing interest in Kubernetes (K8s) when speaking to techies and even more so when speaking to our clients. So in this blog article, we’re asking what managed and unmanaged Kubernetes actually are.

When it comes to Kubernetes (K8s), we’ve noticed that the biggest knowledge gap is often found in one of the fundamental questions – namely, whether you want to use managed or unmanaged Kubernetes. We want to change this!

In this blog post we cover the following topics: a Kubernetes overview, unmanaged Kubernetes, managed Kubernetes and a summary.

Kubernetes overview

For this blog post, we will assume that you have a basic understanding of Kubernetes (K8s). However, we do still want to have a quick look at how Kubernetes actually works and, in particular, how it interacts with the underlying infrastructure:

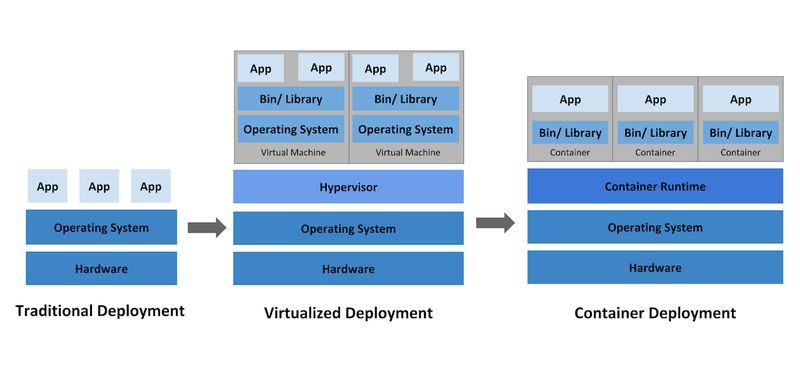

At the bottom of the graphic, there are technically no changes; just ‘like before’, virtual servers are still being used to run the code. Kubernetes acts as a kind of abstraction layer above the virtual servers – in this case, they’re called nodes and they are combined into a cluster (see the diagram by Kubernetes.io). This means that as a developer or operations specialist, I don’t really need to worry about which of the nodes my application ends up running on. I only need to describe my application to Kubernetes in the form of manifest files and subsequently, K8s will ensure that a suitable node is used.

So effectively, Kubernetes handles the direct interaction with the virtual machines, so I don’t need to deal with it myself. The K8s manifest specifies a number of things, including the container image and its version as well as the command to be run. It also contains other settings, such as the resource requests and limits – i.e. the minimum amount of CPU and memory the application needs in order to run successfully, and the maximum amount it is allowed to take up.
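To make this a little more concrete, here is a minimal sketch of what such a manifest contains, built as a plain Python dictionary and printed as JSON (kubectl accepts JSON as well as YAML). The application name, image registry, command and resource values are made up purely for illustration.

```python
import json


def build_deployment(name, image, command, requests, limits, replicas=1):
    """Return a Kubernetes Deployment manifest as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            # Container image and its version (tag)
                            "image": image,
                            # Command to be run inside the container
                            "command": command,
                            "resources": {
                                # Minimum the application needs
                                "requests": requests,
                                # Maximum the application may take up
                                "limits": limits,
                            },
                        }
                    ]
                },
            },
        },
    }


# All values below are hypothetical examples.
manifest = build_deployment(
    name="demo-app",
    image="registry.example.com/demo-app:1.4.2",
    command=["python", "-m", "demo_app"],
    requests={"cpu": "250m", "memory": "256Mi"},
    limits={"cpu": "500m", "memory": "512Mi"},
)

manifest_json = json.dumps(manifest, indent=2)
print(manifest_json)
```

Saved to a file, the printed JSON could then be handed to the cluster with `kubectl apply -f`. In practice, most teams write these manifests by hand as YAML; generating them from code is just one way to show the structure.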

Unmanaged Kubernetes

The word ‘unmanaged’ in the term ‘unmanaged Kubernetes’ refers to the fact that you have to deal with the installation and maintenance of Kubernetes yourself – essentially, you have to manage Kubernetes on your own. Primarily, this will be necessary if hosting with a cloud provider is not possible or not wanted.

Below is an example of a standard, albeit shortened, procedure of the manual installation:

First, the K8s nodes – i.e. virtual machines – have to be created. In this example, we’ll assume there are 3 virtual servers: one for the so-called master node and two for the so-called worker nodes. The master node controls the worker nodes which means that the K8s software that is installed on the master node ultimately decides on which worker node an application is run.
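The decision the master node makes can be pictured with a deliberately simplified sketch. This is not the real Kubernetes scheduler, which filters and scores nodes on many more criteria; the `Node` class, the `pick_node` function and all numbers are hypothetical and only illustrate the idea of choosing a worker node that has enough free resources.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


def pick_node(nodes, cpu_request, memory_request):
    """Return the fitting node with the most free CPU, or None if none fits."""
    # Step 1: keep only nodes that can satisfy the resource requests.
    candidates = [
        node
        for node in nodes
        if node.free_cpu_millicores >= cpu_request
        and node.free_memory_mib >= memory_request
    ]
    if not candidates:
        return None
    # Step 2: among the fitting nodes, prefer the one with the most free CPU.
    return max(candidates, key=lambda node: node.free_cpu_millicores)


# Two hypothetical worker nodes, as in the three-server example above.
workers = [
    Node("worker-1", free_cpu_millicores=500, free_memory_mib=1024),
    Node("worker-2", free_cpu_millicores=1500, free_memory_mib=4096),
]

chosen = pick_node(workers, cpu_request=750, memory_request=512)
print(chosen.name)  # prints "worker-2", the only node with enough free CPU
```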

Next, the required software packages need to be installed and configured. Among others, kubeadm will be required to initialise the master node and also to join the worker nodes to the master node. With this step, you essentially create the Kubernetes cluster, which is now technically ready to use.
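As a rough sketch of this step, the following snippet only assembles and prints the two kubeadm commands involved, without executing them. The pod network range, the master node address and the token and hash placeholders are assumptions for illustration; the real join token and certificate hash are printed by `kubeadm init` when the master node is initialised.

```python
import shlex


def init_command(pod_network_cidr):
    """Command to initialise the master node."""
    return ["kubeadm", "init", f"--pod-network-cidr={pod_network_cidr}"]


def join_command(endpoint, token, ca_cert_hash):
    """Command to join a worker node to an existing master node."""
    return [
        "kubeadm", "join", endpoint,
        "--token", token,
        "--discovery-token-ca-cert-hash", ca_cert_hash,
    ]


# Hypothetical values: the CIDR depends on the chosen network plugin,
# and the token and hash are placeholders for what `kubeadm init` prints.
init_cmd = init_command("10.244.0.0/16")
join_cmd = join_command("192.0.2.10:6443", "<token>", "sha256:<hash>")

# Dry run: print the commands instead of executing them.
# On a real node they would be run as root, e.g. via subprocess.run(cmd, check=True).
for cmd in (init_cmd, join_cmd):
    print(shlex.join(cmd))
```

The first command would be run once on the master node, the second on each of the two worker nodes.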

Next, the K8s manifests can be applied directly, or applications can be installed via a package manager – using Helm, for example.

As an alternative to installing all the individual components required, it would also be possible to use MicroK8s by Canonical, for example. This is often used to simulate Kubernetes for local development. For some years now, however, Canonical has also deemed MicroK8s production-ready. The advantage of this method compared to the previous one is that you’d only need to install one package.

What must be considered, though, is that ‘unmanaged’ also refers to the maintenance. All maintenance tasks must be done manually – be it the set-up and configuration of further worker nodes because the available resources no longer suffice, or even just the regular updates of the Kubernetes version.

Managed Kubernetes

In contrast, managed Kubernetes describes a Kubernetes installation which is made available by a provider or which can be configured via a provider. Providers and their services include the Google Kubernetes Engine (GKE), Amazon Elastic Kubernetes Service (EKS) and IONOS’ managed Kubernetes.

The degree of management can vary. In many cases, there’s ‘only’ the option to have a Kubernetes infrastructure provided – meaning, to have a cluster created and to configure the number and specification of the worker nodes. That’s already a lot more convenient than unmanaged Kubernetes. Usually, a web interface or a command line interface is provided for the interaction. On top of this, further worker nodes can be added, or the Kubernetes version can be conveniently updated.

Right at the top of the ‘managed’ range, you’ll find the managed Kubernetes offering by Canonical, among others. With this set-up, the entire Kubernetes infrastructure is installed and maintained by the provider. With such an offering, the operational part is reduced to the creation of the Kubernetes manifests or Helm charts.

In the upper range of the ‘managed’ services, Google has GKE Autopilot on offer. With this one, the nodes are automatically scaled according to the resources required by the application. Here, as a developer or operations specialist, I can also limit my responsibilities to the creation of the Kubernetes manifests or Helm charts. We will be having a closer look at GKE Autopilot in a future blog post.
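To give a feeling for what ‘scaled according to the resources required’ means, here is a back-of-the-envelope sketch. It is not how GKE Autopilot works internally; the `nodes_needed` function and all numbers are hypothetical and simply estimate a lower bound for the number of nodes from the resource requests of the running pods.

```python
import math


def nodes_needed(pod_cpu_requests, pod_memory_requests, node_cpu, node_memory):
    """Estimate a lower bound for the node count from the pods' requests.

    CPU is given in millicores and memory in MiB. Whichever resource
    is scarcer determines the result.
    """
    by_cpu = math.ceil(sum(pod_cpu_requests) / node_cpu)
    by_memory = math.ceil(sum(pod_memory_requests) / node_memory)
    return max(by_cpu, by_memory, 1)


# Hypothetical workload: every pod requests 500 millicores and 1024 MiB,
# and every node offers 2000 millicores and 4096 MiB.
before = nodes_needed([500] * 6, [1024] * 6, node_cpu=2000, node_memory=4096)
after = nodes_needed([500] * 12, [1024] * 12, node_cpu=2000, node_memory=4096)

print(before, after)  # prints "2 3": doubling the pods calls for a third node
```

With unmanaged Kubernetes, noticing this gap and setting up the additional node would be a manual task.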

Summary

In our experience, most projects and companies will be better off with managed Kubernetes. Thanks to the variety of providers, a lot of hosting requirements can be covered. Depending on how much it’s managed, you can benefit from various conveniences. One of the main benefits is undoubtedly that you don’t need to bother with the maintenance of the clusters – instead, some of this can be automated or you can conveniently deal with it via a web interface or CLI.

If there are explicit requirements for on-premises hosting or valid reasons to run Kubernetes in your own data centre, then there’s no getting around unmanaged Kubernetes.

Good to know: When it comes to Unikube, the issue of ‘managed vs. unmanaged Kubernetes’ isn’t even a problem in the first place. Whether the K8s cluster is fully or only partially managed, unmanaged or even just simulated locally, with Unikube it doesn’t matter how it was installed.